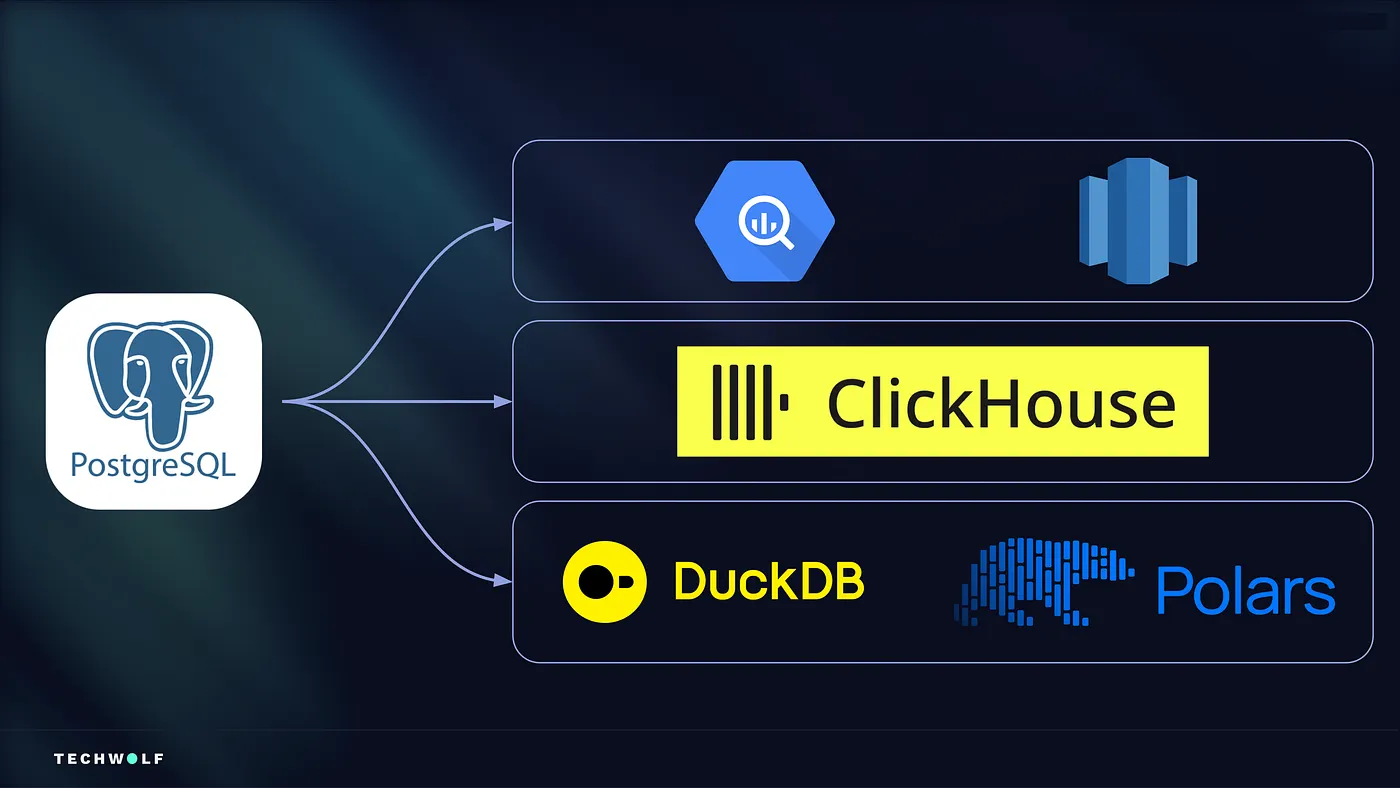

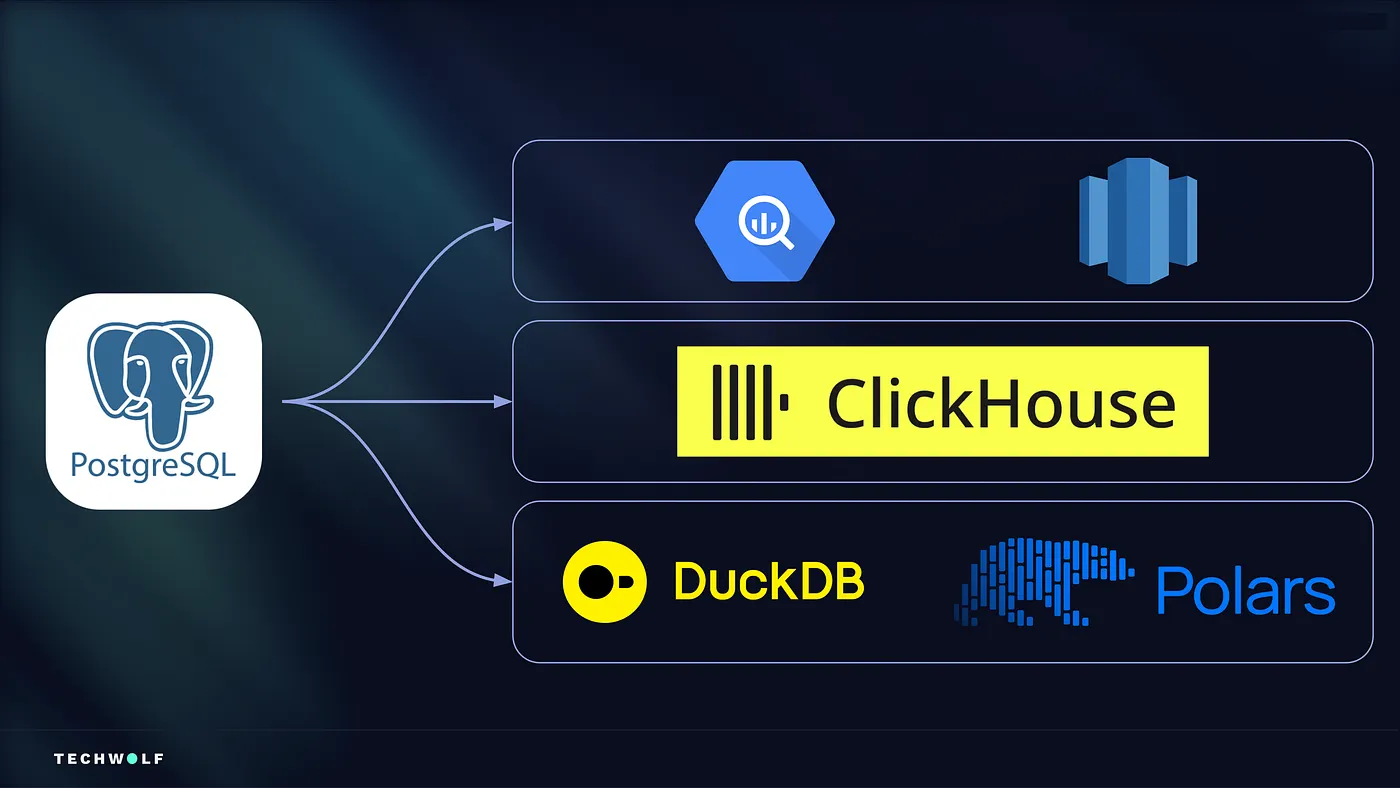

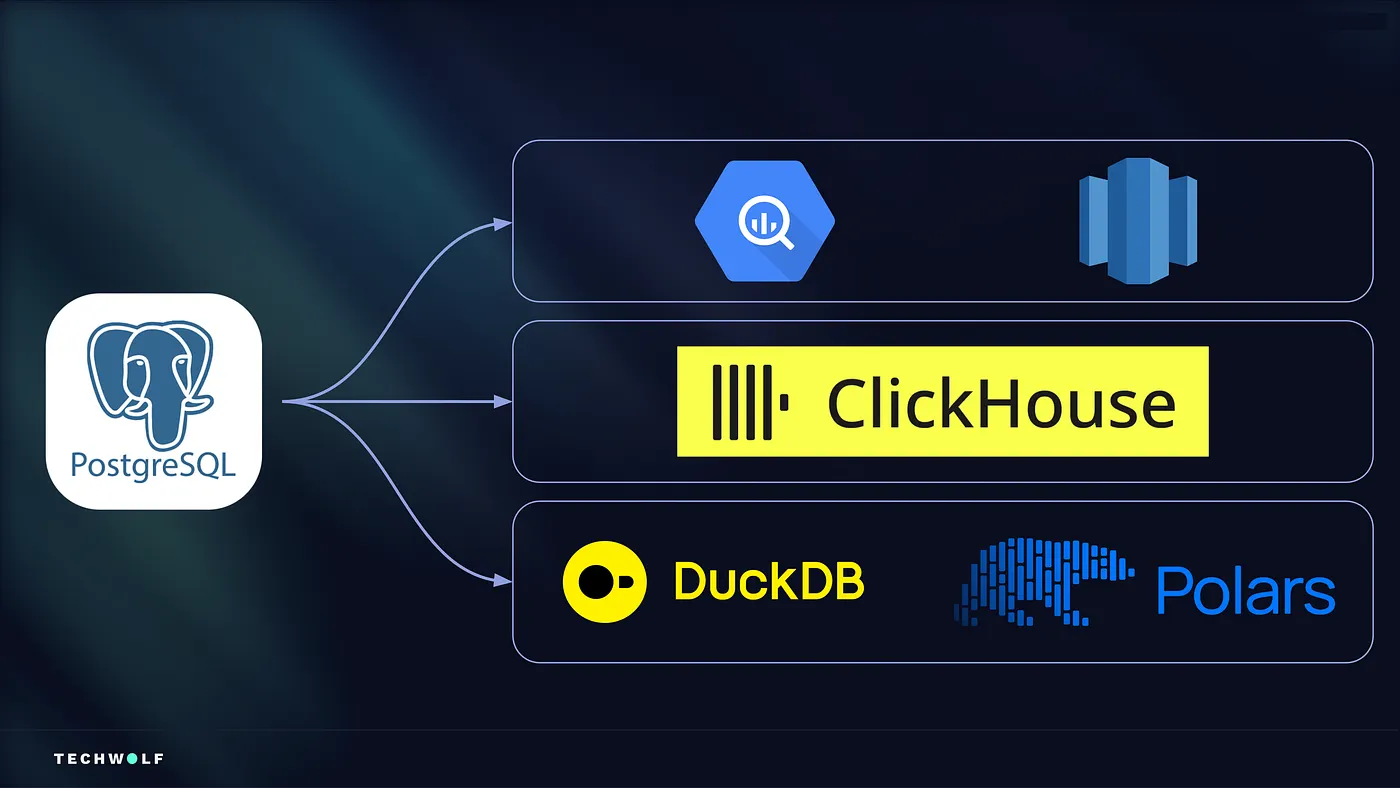

Real-time analytics, 1000× faster: our journey from Postgres to ClickHouse

At TechWolf, we want exploring skills data to feel like a conversation: you ask a tough question, such as “Who has skill X at level Y, in this org and region?”, and the answer pops up immediately so you can keep thinking.

Our Postgres setup was great for transactions but too slow for this kind of slice-and-dice analytics; joins piled up, queries took minutes, and the UI lost its flow. So we rethought the engine around speed and simplicity: a columnar model in ClickHouse that keeps the fields people actually filter by in one place, with ClickHouse doing the heavy aggregations and a light post-processing step to shape the result. The payoff is sub-second responses on tens of millions of skill facts, turning “come back later” into “what else can we learn?” Along the way we made pragmatic calls (self-hosting on Kubernetes, choosing ClickHouse Keeper, being explicit about consistency trade-offs) to keep things stable today and ready for streaming tomorrow.

What we built

- A columnar home for analytics. We shaped a wide ClickHouse table that already contains the fields people slice by (skills, clusters, domains, org metadata). It keeps joins to a minimum and takes advantage of serious compression (some columns shrink by more than 200×), which is a big part of why it feels instant.

- Split the work where it shines. ClickHouse handles the heavy distinct counts; we do the final JSON shaping with Polars. That small change alone shaved roughly 100 ms off the response time.

- Simple sync for now, streaming ready later. Skills don’t change second-to-second, so a daily full sync is perfectly fine today, and leaves a clean path to streaming when it’s needed.

- Self-hosted, made sane. We run ClickHouse on Kubernetes with Altinity’s operator and use ClickHouse Keeper (the modern ZooKeeper-compatible coordinator) after early pains with ZooKeeper. It’s been the steadier choice.

- Clear about trade-offs. When we need read-your-own-writes, we enable sequential consistency and accept the small latency tax. Calling this out explicitly kept us honest about UX vs. guarantees.

- Cost/perf that scales predictably. Benchmarks told us our workload is compute-bound and scales almost linearly with vCPUs. We landed on c7i.2xlarge: ~300 ms for single queries and <2 s under concurrency, with gp3 storage working just fine.
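As a rough illustration of the consistency trade-off above, the sketch below shows how per-query settings might be toggled only when read-your-own-writes is needed. It assumes the trade-off maps to ClickHouse's `select_sequential_consistency` setting, which works together with quorum inserts (`insert_quorum`) and requires `insert_quorum_parallel` to be disabled. The helper names `query_settings` and `insert_settings` are hypothetical, and how the dictionaries reach the server depends on the client library in use.

```python
def query_settings(read_your_own_writes: bool) -> dict[str, int]:
    """Return per-query SELECT settings (hypothetical helper for this sketch)."""
    if read_your_own_writes:
        # Only replicas that hold all quorum-acknowledged inserts may answer,
        # which is where the small latency tax comes from.
        return {"select_sequential_consistency": 1}
    # Default path: no extra guarantees, lowest latency.
    return {}


def insert_settings(replicas_to_ack: int) -> dict[str, int]:
    """Return INSERT settings that make sequentially consistent reads meaningful."""
    return {
        # Wait until this many replicas have confirmed the write.
        "insert_quorum": replicas_to_ack,
        # Parallel quorum inserts must be off for sequential consistency.
        "insert_quorum_parallel": 0,
    }


if __name__ == "__main__":
    print(query_settings(read_your_own_writes=True))
    print(insert_settings(replicas_to_ack=2))
```

Keeping the stricter settings behind an explicit flag means the common read path stays fast, and the latency cost is paid only by the requests that actually need the guarantee.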

Read the full blogpost on Medium
